Study Reveals Signs of AI Degradation Caused by Social Media

A new joint study by researchers from the University of Texas and Purdue University has found alarming signs of cognitive degradation in large language models (LLMs) trained on low-quality social media content.

The team fed four popular AI models a one-month dataset of viral posts from X (formerly Twitter), observing measurable declines in their cognitive and ethical performance. The results were striking:

- Reasoning ability dropped by 23%.
- Long-term memory declined by 30%.
- Signs of narcissism and psychopathy increased, as measured by personality test metrics.

Even after retraining the models on clean, high-quality datasets, the researchers couldn’t fully eliminate these distortions. The study introduces the “AI brain rot hypothesis”, which suggests that constant exposure to viral, low-information content can cause lasting cognitive decay in AI systems that later retraining only partially reverses.

Two key metrics were used to classify poor-quality content:

- M1 (Engagement Score): viral, attention-grabbing posts with high likes and shares.
- M2 (Semantic Quality): posts with low informational value or exaggerated claims.
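To make the two metrics concrete, here is a minimal Python sketch of how posts might be split into a low-quality set and a control set. It is an illustration only, not the study's actual pipeline: the `Post` fields, the `min_engagement` threshold of 500, and the precomputed `low_information` label are all assumptions introduced for this example.

```python
from dataclasses import dataclass


@dataclass
class Post:
    text: str
    likes: int
    shares: int
    # Hypothetical label, e.g. assigned by a human or model annotator.
    low_information: bool


def is_junk_m1(post: Post, min_engagement: int = 500) -> bool:
    """M1-style check: flag posts whose likes plus shares reach a threshold.

    The threshold is an assumed value, not one taken from the study.
    """
    return post.likes + post.shares >= min_engagement


def is_junk_m2(post: Post) -> bool:
    """M2-style check: flag posts labeled as low in informational value."""
    return post.low_information


def split_by_metric(posts, is_junk):
    """Return (junk, control) lists according to the given metric function."""
    junk = [p for p in posts if is_junk(p)]
    control = [p for p in posts if not is_junk(p)]
    return junk, control


posts = [
    Post("You won't BELIEVE this!!!", likes=900, shares=400, low_information=True),
    Post("Notes on a proof of the pumping lemma", likes=12, shares=1, low_information=False),
    Post("Quiet rant with wild claims", likes=3, shares=0, low_information=True),
]

m1_junk, m1_control = split_by_metric(posts, is_junk_m1)
m2_junk, m2_control = split_by_metric(posts, is_junk_m2)
```

In this toy data the two metrics disagree on the third post: it is not viral, so M1 leaves it in the control set, but M2 flags it for low informational value. That is one reason to treat the two metrics as separate definitions of poor quality.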

Performance on reasoning benchmarks fell sharply. For example, the ARC-Challenge score dropped from 74.9 to 57.2 as the share of low-quality data increased from 0% to 100%. Similarly, the RULER-CWE score fell from 84.4 to 52.3.
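A drop in benchmark points and a drop in percent are different things, so it helps to compute both from the reported scores. The short Python sketch below does this; the helper name `score_drop` is made up for this example.

```python
def score_drop(before: float, after: float) -> tuple[float, float]:
    """Return (absolute drop in points, relative drop in percent), rounded to 0.1."""
    absolute = before - after
    relative = absolute / before * 100
    return round(absolute, 1), round(relative, 1)


arc = score_drop(74.9, 57.2)    # ARC-Challenge: (17.7, 23.6)
ruler = score_drop(84.4, 52.3)  # RULER-CWE: (32.1, 38.0)
```

So ARC-Challenge fell by 17.7 points, or about 23.6% relative to its starting score, and RULER-CWE fell by 32.1 points, or about 38.0%.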

Researchers also found that models became overconfident in wrong answers and skipped logical reasoning steps, preferring short, surface-level responses over detailed explanations.

To counter this trend, scientists recommend:

- Regular cognitive health monitoring of deployed models.
- Stricter data quality control during pretraining.
- Focused research on how viral content alters AI learning patterns.

Without such safeguards, AI systems risk inheriting distortions from generated content, leading to a self-reinforcing cycle of degradation.
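The monitoring recommendation could be sketched as a periodic comparison of a deployed model's benchmark scores against a stored baseline. The Python example below is one possible shape for such a check, not a method described by the researchers. The function name, the 5% tolerance, and all scores are hypothetical.

```python
def find_regressions(baseline: dict, current: dict, max_relative_drop: float = 0.05) -> dict:
    """Return benchmarks whose score fell by more than the allowed fraction.

    Values in the result are the relative drops in percent, rounded to 0.1.
    Benchmarks missing from `current` or with a non-positive baseline are skipped.
    """
    flagged = {}
    for name, base_score in baseline.items():
        if name not in current or base_score <= 0:
            continue
        drop = (base_score - current[name]) / base_score
        if drop > max_relative_drop:
            flagged[name] = round(drop * 100, 1)
    return flagged


# Hypothetical scores, for illustration only.
baseline_scores = {"reasoning": 80.0, "memory": 90.0}
current_scores = {"reasoning": 79.0, "memory": 72.0}

alerts = find_regressions(baseline_scores, current_scores)
```

Here `alerts` contains only the `memory` benchmark, which fell by 20%. The 1.25% dip in `reasoning` stays under the assumed tolerance and is not flagged.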

Leave a Comment

Comments

No comments yet. Be the first to comment!

You may also like

Updating the YouTube Shorts Viewing System

04-04-2025

Rating: 0 | Views: 2969 | Reading time: 2 min

Read →

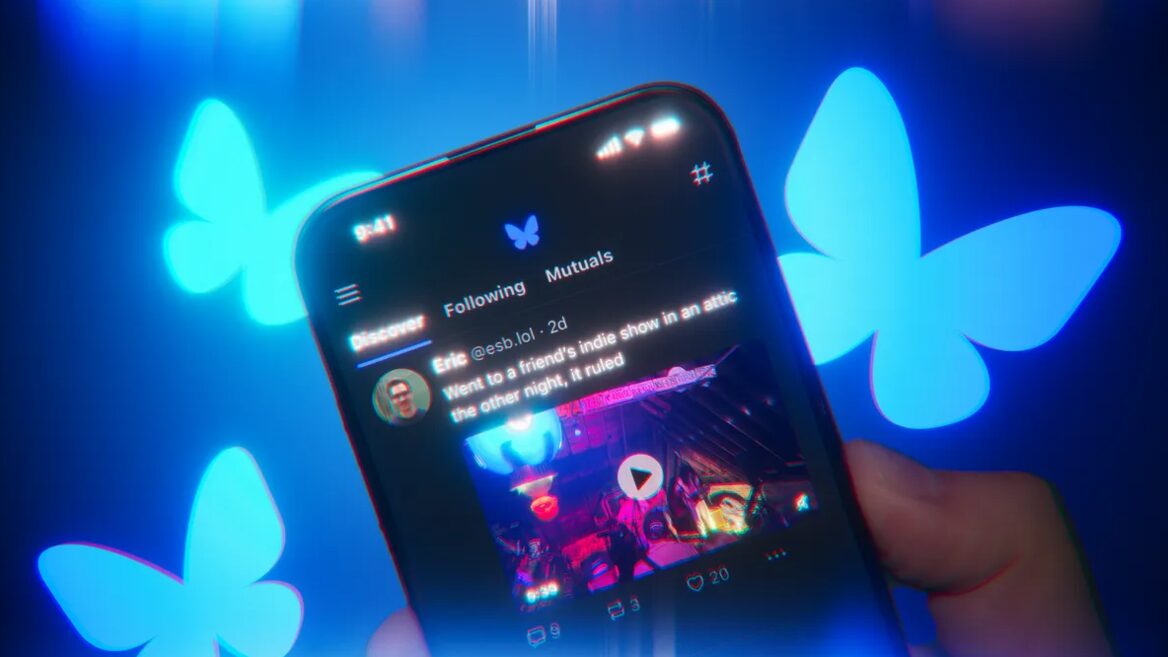

Bluesky Reaches 40 Million Users and Prepares to Launch Dislike Feature

03-11-2025

Rating: 0 | Views: 860 | Reading time: 2 min

Read →

Can You Earn Money from YouTube Shorts?

10-12-2025

Rating: 0 | Views: 1023 | Reading time: 3 min

Read →

TikTok signs a deal to sell its U.S. business

19-12-2025

Rating: 0 | Views: 742 | Reading time: 5 min

Read →

Social media in 2026: from reach to systematic brand presence

07-01-2026

Rating: 0 | Views: 1069 | Reading time: 4 min

Read →